The Problem

Your Time Should Be Spent Making Music

We talked to hundreds of music producers. The frustration is universal.

Endless Sample Hunting

Hours lost scrolling through generic sample packs that never quite fit your vision.

Expensive Equipment

Professional recordings require gear most producers can't afford or don't have space for.

Broken Workflows

Switching between apps, exporting, importing—killing your creative momentum.

SoundBerry VST

Integrated in your DAW

Our Solution

AI That Works The Way You Do

SoundBerry lives inside your DAW. Generate exactly what you need, when you need it—without leaving your session.

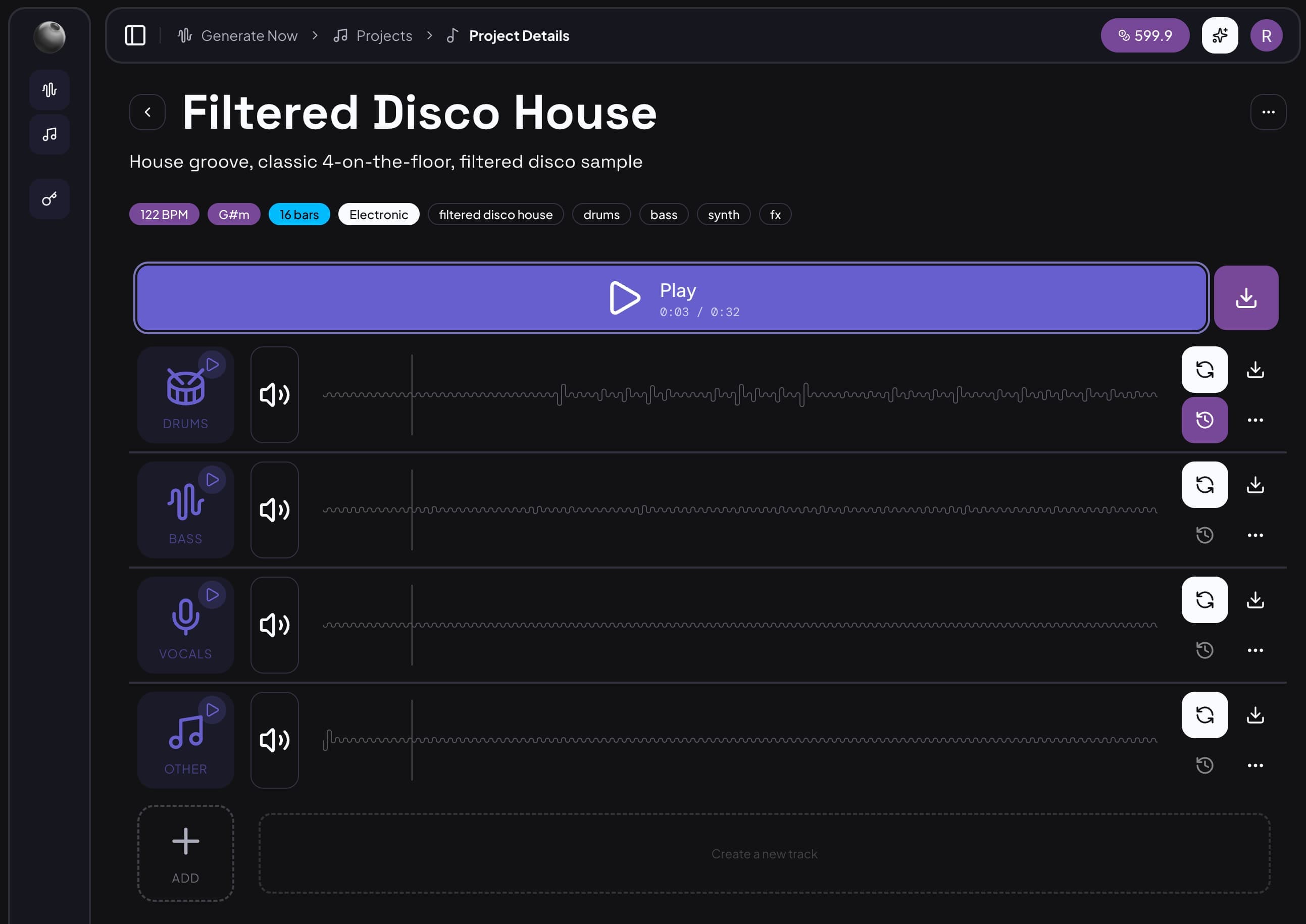

Custom Sounds, Instantly

Describe what you want or upload a reference. Get stems that match your track's key, tempo, and vibe.

DAW-Native Workflow

Our VST plugin means zero context switching. Generate, audition, and drop directly into your timeline.

Production-Ready Output

High-quality WAV stems, properly labeled and ready to mix. No post-processing needed.

Production Tools

Everything You Need to Create

Professional-grade AI tools designed for real production workflows. No gimmicks, just results.

#1

Stem Generation

Generate new stems that perfectly match your existing audio. Add bass, drums, or any instrument with AI precision.

#2

DAW Integration

Work directly in Ableton, FL Studio, and other VST3-compatible DAWs, as well as Logic. No exports, no interruptions.

#3

Beat Analysis

Automatically detect BPM, key, and optimal loop points. AI-powered analysis for perfect timing every time.

#4

Stem Separation

Split any track into individual stems: vocals, drums, bass, and more. Clean isolation for remixing and sampling.

#5

Text to Music

Describe your vision in words. Our AI translates your ideas into production-ready audio.

Your Workflow

From Idea to Track in Minutes

SoundBerry fits seamlessly into how you already work. No new apps to learn, no exports to manage.

Describe or Reference

Tell the AI what you need, such as 'dark synth bass, 120 BPM', or drop in a reference track to match.

AI Analyzes & Generates

Our models analyze harmony, rhythm, and texture to generate stems that actually fit your project.

Drag Into Your Session

Preview options, pick your favorite, and drop directly into your DAW timeline. Done.

Our Story

From Frustration to Innovation

We Built What We Wished Existed

As producers ourselves, we spent countless nights hunting for the perfect sound. We knew there had to be a better way.

“Every producer we spoke with had the same story: hours lost to sample libraries, creative momentum killed by broken workflows, great ideas abandoned because the right sound was always just out of reach.”

SoundBerry Team

Founders

So we built SoundBerry—not as another tool to learn, but as an extension of how producers already work. An AI assistant that lives in your DAW and understands what you're trying to create.